BBR (Bottleneck Bandwidth and Round-trip propagation time) is a TCP congestion control algorithm developed by Google that you can use in place of cubic, the default algorithm on Linux systems. Unlike cubic, which slows down in response to packet loss, BBR continuously estimates the available bandwidth and round-trip time and paces data to match them. This approach can deliver better throughput on high-bandwidth, high-latency connections such as cloud servers, VPS instances, and international network links, where packet loss can occur without actual congestion.

This guide covers checking your current congestion control algorithm, verifying BBR availability, enabling BBR through sysctl configuration, and confirming the change takes effect. You’ll also learn when BBR provides the most benefit, how to test performance improvements, and how to revert to cubic if needed.

When to Use BBR

BBR delivers the most significant performance improvements on high-bandwidth, high-latency connections where the default cubic algorithm struggles. The algorithm measures actual network capacity rather than reacting to packet loss, making it particularly effective when the bandwidth-delay product is high or when the network drops packets for reasons other than congestion.

Consider BBR for the following scenarios:

Where BBR Delivers the Biggest Gains

- Cloud servers and VPS instances serving international traffic

- Long-distance network connections (cross-country or intercontinental)

- High-bandwidth links with occasional packet loss

- Content delivery and streaming servers (e.g., Jellyfin, Plex)

- Satellite or wireless networks with variable latency

When Cubic Remains the Better Fit

- Local network connections with low latency

- Desktop systems used primarily for browsing

- Environments with strict compatibility requirements

- Systems running legacy applications sensitive to congestion control changes

In practice, the difference between BBR and cubic is most apparent on connections with round-trip times above 50ms and bandwidth above 100Mbps. On local networks or low-bandwidth connections, you may see little or no improvement.
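To see why these thresholds matter, you can compute the bandwidth-delay product: the amount of data that must be in flight to keep a link fully used. The helper below is a minimal sketch (the name bdp_bytes is illustrative, not a standard tool) that multiplies bandwidth in Mbit/s by round-trip time in milliseconds and prints the result in bytes.

```bash
# Hypothetical helper: bandwidth-delay product in bytes.
# Usage: bdp_bytes <bandwidth in Mbit/s> <round-trip time in ms>
bdp_bytes() {
    # bytes per second = Mbit/s * 1,000,000 / 8; seconds = ms / 1000
    awk -v mbps="$1" -v rtt_ms="$2" \
        'BEGIN { printf "%d\n", mbps * 1000000 / 8 * rtt_ms / 1000 }'
}
```

After sourcing the file, bdp_bytes 100 50 prints 625000: roughly 625 KB must be unacknowledged at any moment to keep a 100Mbps path with a 50ms round-trip time busy, which is where a loss-reactive algorithm that halves its window has the most ground to recover.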

Verify BBR Availability

Before enabling BBR, confirm that your kernel supports it and that the required kernel module is available.

If you run an older system or want a performance-oriented kernel, consider the HWE, XanMod, or Liquorix kernels, which provide newer kernel versions than older releases ship by default.

Start by checking your kernel version:

uname -r

The output shows your kernel version, for example:

5.15.0-122-generic

BBR requires a Linux kernel version 4.9 or newer. If your output shows an older release, update through standard system upgrades or switch to a hardware enablement (HWE), XanMod, or Liquorix kernel before proceeding.
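If you want to script the version check, the sketch below compares the output of uname -r against the 4.9 minimum using GNU sort's version ordering (sort -V). The function names are illustrative; suffixes such as -122-generic sort after the numeric part, so they do not affect the comparison.

```bash
# Succeeds if version $1 is at least version $2 (GNU sort -V ordering).
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Check the running kernel against BBR's 4.9 minimum.
check_kernel_for_bbr() {
    local kernel
    kernel="$(uname -r)"
    if version_ge "$kernel" "4.9"; then
        echo "Kernel $kernel meets the 4.9 minimum for BBR"
    else
        echo "Kernel $kernel is older than 4.9; upgrade before enabling BBR" >&2
        return 1
    fi
}
```

After sourcing the file, run check_kernel_for_bbr; it returns a non-zero status on kernels older than 4.9.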

Next, verify BBR is available as a congestion control option:

sysctl net.ipv4.tcp_available_congestion_control

The output lists the available algorithms. You should see “bbr” in the list:

net.ipv4.tcp_available_congestion_control = reno cubic bbr

If BBR doesn’t appear in the list, the tcp_bbr kernel module may not be loaded. Load it manually:

sudo modprobe tcp_bbr

Then, confirm the module loaded successfully:

lsmod | grep tcp_bbr

You should see the tcp_bbr module listed. If the module fails to load or BBR remains unavailable afterward, your kernel may lack BBR support and need an update.
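The availability check and the conditional module load can be combined into one helper. This is a sketch with illustrative function names: it reads the same sysctl value shown above with sysctl -n, runs modprobe only when "bbr" is missing as a whole word, and must run as root for modprobe to succeed.

```bash
# Succeeds if "bbr" appears as a whole word in a space-separated list,
# such as the value of net.ipv4.tcp_available_congestion_control.
list_has_bbr() {
    case " $1 " in
        *" bbr "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Load tcp_bbr only if BBR is not already offered (run as root).
ensure_bbr_available() {
    local available
    available="$(sysctl -n net.ipv4.tcp_available_congestion_control)" || return 1
    if list_has_bbr "$available"; then
        echo "BBR already available: $available"
        return 0
    fi
    modprobe tcp_bbr || return 1
    # Re-read the list to confirm the module registered BBR
    available="$(sysctl -n net.ipv4.tcp_available_congestion_control)"
    list_has_bbr "$available"
}
```

From a root shell (for example after sudo -i), source the file and run ensure_bbr_available; a non-zero exit status means BBR is still missing after loading the module.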

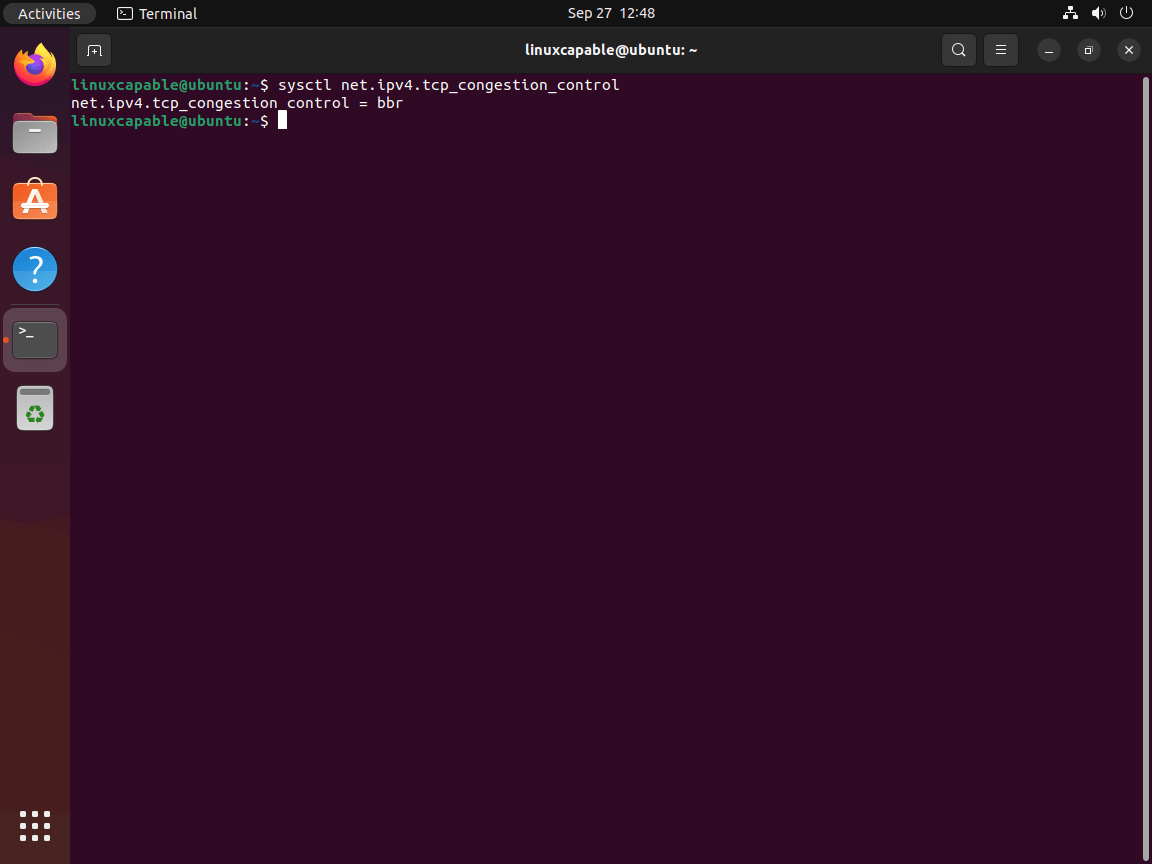

Check Current Congestion Control Algorithm

Next, check which congestion control algorithm your system currently uses:

sysctl net.ipv4.tcp_congestion_control

The output displays the active algorithm. Most Ubuntu systems default to cubic:

net.ipv4.tcp_congestion_control = cubic

Configure BBR in Sysctl

To enable BBR, you’ll need to modify the sysctl configuration file, which controls kernel parameters. First, open the file with nano:

sudo nano /etc/sysctl.conf

Next, add these two lines to the end of the file:

net.core.default_qdisc=fq
net.ipv4.tcp_congestion_control=bbr

The first line sets the default queueing discipline to fq (Fair Queue), the scheduler commonly recommended for BBR. fq queues packets per flow and enforces the pacing rate that BBR calculates for each connection; kernels 4.13 and newer can also pace inside the TCP stack, but fq remains the usual recommendation. The second line makes BBR the default congestion control algorithm, which applies to TCP connections opened after the configuration is loaded. Save the file with Ctrl + O and exit with Ctrl + X.
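If you prefer a non-interactive edit, for example in a provisioning script, a small helper can append each line only when it is not already present, so running it twice does not create duplicates. This is a sketch; the function name add_line_once is illustrative, and it appends to the end of the file just like the manual step.

```bash
# Append a line to a file unless an identical line already exists.
# Usage: add_line_once <file> <line>
add_line_once() {
    local file="$1" line="$2"
    # -x: match the whole line; -F: treat the pattern as a fixed string
    if ! grep -qxF "$line" "$file" 2>/dev/null; then
        # Start on a fresh line if the file lacks a trailing newline
        if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
            printf '\n' >> "$file"
        fi
        printf '%s\n' "$line" >> "$file"
    fi
}
```

From a root shell, source the file and run:

add_line_once /etc/sysctl.conf 'net.core.default_qdisc=fq'
add_line_once /etc/sysctl.conf 'net.ipv4.tcp_congestion_control=bbr'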

Apply BBR Changes

After saving the configuration file, apply the changes without rebooting:

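sudo sysctl -p

This command reloads /etc/sysctl.conf and makes BBR the default for new connections immediately, without a reboot. Existing connections keep the algorithm they started with, and network interfaces that are already up keep their current queueing discipline until they are reinitialized or the system reboots. The output displays the applied settings:

net.core.default_qdisc = fq
net.ipv4.tcp_congestion_control = bbr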

Verify BBR is Enabled

Once the changes are applied, confirm BBR is now active:

sysctl net.ipv4.tcp_congestion_control

The output should show BBR as the active algorithm:

net.ipv4.tcp_congestion_control = bbr

Additionally, verify the fq queueing discipline is active:

sysctl net.core.default_qdisc

The output confirms fq is set:

net.core.default_qdisc = fq

Finally, check that the BBR module remains loaded:

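lsmod | grep tcp_bbr

You should see the tcp_bbr module in the output. If BBR is compiled directly into your kernel rather than built as a module, this command prints nothing even though BBR works, so the two sysctl checks above are the deciding test. If all three checks pass, BBR is fully operational on your system.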
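For monitoring or provisioning scripts, the two sysctl checks can be reduced to a single pass/fail command. This sketch reads the values straight from /proc/sys (the same data sysctl reports) and deliberately skips lsmod, since a kernel with BBR built in shows no tcp_bbr module. The function name and the SYSCTL_ROOT override are assumptions added so the check can be pointed at a test directory.

```bash
# Where sysctl values live; override SYSCTL_ROOT to test against a copy.
SYSCTL_ROOT="${SYSCTL_ROOT:-/proc/sys}"

# Print the current settings; succeed only if BBR and fq are both active.
bbr_status() {
    local cc qdisc
    cc="$(cat "$SYSCTL_ROOT/net/ipv4/tcp_congestion_control" 2>/dev/null)"
    qdisc="$(cat "$SYSCTL_ROOT/net/core/default_qdisc" 2>/dev/null)"
    if [ "$cc" = "bbr" ] && [ "$qdisc" = "fq" ]; then
        echo "OK: congestion control=$cc, default qdisc=$qdisc"
    else
        echo "NOT ACTIVE: congestion control=${cc:-unknown}, default qdisc=${qdisc:-unknown}"
        return 1
    fi
}
```

Reading these files does not require root, so bbr_status can run as an ordinary user.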

Test BBR Performance

Measuring BBR’s impact requires comparing network performance before and after enabling it. While comprehensive testing demands tools like iperf3 and controlled network environments, you can perform basic validation with standard utilities.

For example, test download speed and timing with curl:

curl -o /dev/null -w "Download speed: %{speed_download} bytes/sec\nTime total: %{time_total}s\n" https://speed.hetzner.de/1GB.bin

Run this test once with cubic enabled and again after switching to BBR, using the same remote file each time. The example pulls Hetzner’s 1GB.bin object; swap the URL for any large file appropriate for your testing needs. Another reliable option is Linode’s Fremont mirror if you prefer a North American endpoint. Compare download speeds and total time. Keep in mind that congestion control acts on the sending side: when you download a file, the remote server’s algorithm controls the transfer, so to measure your own BBR setting, run the test from a remote client that downloads a large file from your server. BBR typically shows improvement on high-latency connections (50ms+ round-trip time) but may show minimal difference on local or low-latency links.
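A single download is noisy, so it can help to repeat the test and read the results in Mbit/s rather than bytes per second. The sketch below uses only curl options already shown above plus -sS (quiet output, errors still shown); the function names are illustrative, and because the sender's algorithm governs the transfer, run it on a client downloading from the machine whose setting you changed.

```bash
# Convert a bytes-per-second figure (curl's %{speed_download}) to Mbit/s.
to_mbps() {
    awk -v bps="$1" 'BEGIN { printf "%.2f\n", bps * 8 / 1000000 }'
}

# Download a URL several times and print the throughput of each run.
# Usage: measure_download <url> [runs]   (runs defaults to 3)
measure_download() {
    local url="$1" runs="${2:-3}" i=1 bps
    while [ "$i" -le "$runs" ]; do
        bps="$(curl -sS -o /dev/null -w '%{speed_download}' "$url")" || return 1
        echo "Run $i: $(to_mbps "$bps") Mbit/s"
        i=$((i + 1))
    done
}
```

For example, measure_download https://your-server.example/large-file.bin 5, where the URL is a hypothetical placeholder for a large file hosted on your BBR server.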

You can also inspect active connections and the congestion control algorithm each one uses:

ss -tin

This displays TCP connection details, including the congestion control algorithm for each connection. Look for “bbr” in the output to confirm that connections opened after the change use BBR.
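On a busy server, counting connections per algorithm is easier than scanning the raw output. In ss -tin output, each connection's details appear on an indented line where the algorithm name is a standalone token (BBR connections also carry a separate bbr:(...) field, which this sketch ignores). The function name and the list of algorithm names it recognizes are assumptions; extend the list if your kernel offers others.

```bash
# Count TCP connections per congestion control algorithm from `ss -tin`
# output on stdin. Detail lines start with whitespace; the first token that
# exactly matches a known algorithm name is counted for that connection.
count_cc() {
    awk '
        /^[ \t]/ {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^(bbr|bbr2|cubic|reno|htcp|vegas|westwood|bic|dctcp)$/) {
                    seen[$i]++
                    break
                }
            }
        }
        END { for (algo in seen) print algo, seen[algo] }
    ' | sort
}
```

Run it as ss -tin | count_cc to print one line per algorithm with its connection count.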

For production servers, observe sustained transfer performance over days or weeks through application logs, monitoring dashboards, or bandwidth graphs. BBR’s benefits become most apparent under sustained load on long-distance connections.

Revert to Cubic

If BBR causes issues or doesn’t provide expected benefits, you can easily revert to the default cubic algorithm. First, open the sysctl configuration file:

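sudo nano /etc/sysctl.conf

Next, locate and remove or comment out these lines:

net.core.default_qdisc=fq
net.ipv4.tcp_congestion_control=bbr

To comment them out instead of deleting them, add a # at the beginning of each line:

#net.core.default_qdisc=fq
#net.ipv4.tcp_congestion_control=bbr

Save the file. This stops the settings from being applied at the next boot, but sudo sysctl -p only applies the settings still present in the file, so it does not switch the running kernel back to cubic. To revert immediately, set cubic explicitly:

sudo sysctl -w net.ipv4.tcp_congestion_control=cubic

On its own, this command switches to cubic without editing the file, but the change lasts only until the next reboot. Likewise, the default queueing discipline returns to the system default at the next reboot once the file no longer sets fq. Verify that cubic is active:

sysctl net.ipv4.tcp_congestion_control

The output confirms the change: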

net.ipv4.tcp_congestion_control = cubic
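Both halves of the revert, disabling the lines in the file and switching the running kernel, can be scripted together. This sketch comments out the two BBR lines with GNU sed and then sets cubic with sysctl -w; the function names are illustrative, revert_to_cubic must run as root, and lines that are already commented out do not match, so running it twice is harmless.

```bash
# Comment out the BBR lines in a sysctl file so they no longer apply at boot.
# Usage: disable_bbr_config [file]   (defaults to /etc/sysctl.conf)
disable_bbr_config() {
    local file="${1:-/etc/sysctl.conf}"
    # Match only uncommented lines, allowing optional spaces around "="
    sed -i -E 's/^(net\.core\.default_qdisc *= *fq|net\.ipv4\.tcp_congestion_control *= *bbr) *$/#\1/' "$file"
}

# Disable the persistent setting, then switch the running kernel to cubic.
revert_to_cubic() {
    disable_bbr_config "$@" || return 1
    sysctl -w net.ipv4.tcp_congestion_control=cubic
}
```

From a root shell, source the file and run revert_to_cubic.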

Troubleshooting Common Issues

BBR Missing After Reloading sysctl

First, check that the tcp_bbr module loaded successfully:

lsmod | grep tcp_bbr

If this returns nothing, manually load the module:

sudo modprobe tcp_bbr

To ensure the module loads automatically at boot, add it to the modules configuration:

echo "tcp_bbr" | sudo tee -a /etc/modules-load.d/bbr.conf

Then, verify the configuration file was created:

cat /etc/modules-load.d/bbr.conf

BBR Module Fails to Load

If modprobe tcp_bbr fails, check whether your kernel supports BBR:

modinfo tcp_bbr

If this command reports that the module is not found, your kernel likely lacks BBR support. Update your kernel through standard system updates, then reboot into the new kernel:

sudo apt update && sudo apt upgrade -y

Alternatively, consider the HWE kernel, which provides a newer kernel version.
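Ubuntu installs each kernel's build configuration as /boot/config-<version>, so you can also check directly whether BBR was compiled in (=y), built as a module (=m), or left out. This is a sketch: the function name is illustrative, and it defaults to the running kernel's config file but accepts another path.

```bash
# Classify BBR support from a kernel config file.
# Usage: bbr_kernel_support [config-file]   (defaults to the running kernel)
bbr_kernel_support() {
    local config="${1:-/boot/config-$(uname -r)}"
    if [ ! -r "$config" ]; then
        echo "cannot read $config" >&2
        return 2
    fi
    # Unsupported kernels contain "# CONFIG_TCP_CONG_BBR is not set" or nothing
    case "$(grep '^CONFIG_TCP_CONG_BBR=' "$config")" in
        CONFIG_TCP_CONG_BBR=y) echo "built-in" ;;
        CONFIG_TCP_CONG_BBR=m) echo "module" ;;
        *) echo "not supported"; return 1 ;;
    esac
}
```

A result of built-in also explains why lsmod shows no tcp_bbr module even though BBR works.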

BBR Settings Revert After Reboot

Verify your sysctl.conf changes are properly formatted and saved. Check the file contents:

grep -E 'bbr|fq' /etc/sysctl.conf

You should see both configuration lines:

net.core.default_qdisc=fq
net.ipv4.tcp_congestion_control=bbr
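If both lines are present but the value still changes after a reboot, another sysctl file may set the same keys. systemd applies sysctl files in lexical order of their file names, so a file whose name sorts later wins. The sketch below lists every matching line with its file name and line number; the function name is illustrative and the default directories are the usual sysctl.d locations.

```bash
# List every sysctl file that sets the BBR-related keys.
# Usage: find_bbr_settings [paths...]   (defaults to the standard locations)
find_bbr_settings() {
    if [ "$#" -eq 0 ]; then
        set -- /etc/sysctl.conf /etc/sysctl.d /run/sysctl.d \
               /usr/local/lib/sysctl.d /usr/lib/sysctl.d
    fi
    # -r: recurse; -H: print file names; -n: line numbers; -s: skip missing paths
    grep -rHnsE '^[[:space:]]*net\.(ipv4\.tcp_congestion_control|core\.default_qdisc)' "$@"
}
```

Any line that sets cubic or a different qdisc in a later-sorting file is a likely cause of the revert.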

If the lines are missing or incorrect, edit the file again and reapply:

sudo nano /etc/sysctl.conf

sudo sysctl -p

No Noticeable Performance Gain

BBR provides the most benefit on high-bandwidth, high-latency connections. Test your connection latency to determine if BBR applies:

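ping -c 10 google.com

Where possible, replace google.com with a host near your actual users or peers, because large services usually answer from a nearby edge server and understate long-distance latency. If the average latency is below 50ms, BBR may show minimal improvement, as is common for local network transfers, low-bandwidth connections, and links with little packet loss. Test performance on long-distance connections or during peak traffic periods for a more accurate assessment.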
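To apply the 50ms rule of thumb in a script, you can pull the average from ping's summary line, which the iputils ping on Ubuntu prints as rtt min/avg/max/mdev = a/b/c/d ms. Both function names are illustrative, and the 50ms default threshold comes from the guidance above.

```bash
# Print the average RTT in ms from ping output on stdin.
# Splitting on "/" makes the average the fifth field of the summary line.
avg_rtt_ms() {
    awk -F'/' '/min\/avg\/max/ { print $5 }'
}

# Succeed if an RTT in ms exceeds a threshold (default 50).
rtt_above() {
    awk -v rtt="$1" -v limit="${2:-50}" 'BEGIN { exit !(rtt > limit) }'
}
```

For example, run ping -c 10 example.com | avg_rtt_ms, with example.com standing in for your own remote host, and pass the result to rtt_above to decide whether BBR is likely to help.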

Application Compatibility Problems

Some older applications or network equipment may behave unexpectedly with BBR. Monitor system logs for connection errors:

sudo journalctl -b | grep -iE 'connection|timeout'

If you notice connection problems, timeouts, or degraded performance after enabling BBR, temporarily revert to cubic for testing:

sudo sysctl -w net.ipv4.tcp_congestion_control=cubic

Compare application behavior with both algorithms to identify whether BBR causes the issue. As a general precaution, test BBR on non-critical systems before deploying it to production environments.
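For a side-by-side comparison, the helper below switches the system-wide default for the duration of one command and then restores whatever was set before, even if the command fails. It is a sketch under stated assumptions: the function name is hypothetical, it must run as root, it writes the /proc/sys file that sysctl -w also changes, and only connections opened while the command runs use the temporary algorithm. SYSCTL_ROOT is an added override for testing.

```bash
# Where sysctl values live; override SYSCTL_ROOT to test against a copy.
SYSCTL_ROOT="${SYSCTL_ROOT:-/proc/sys}"

# Run a command with a temporary default congestion control algorithm.
# Usage: with_congestion_control <algorithm> <command> [args...]
with_congestion_control() {
    local algo="$1" knob previous status
    shift
    knob="$SYSCTL_ROOT/net/ipv4/tcp_congestion_control"
    previous="$(cat "$knob")" || return 1
    printf '%s\n' "$algo" > "$knob" || return 1
    "$@"
    status=$?
    # Restore the original default regardless of the command's result
    printf '%s\n' "$previous" > "$knob"
    return "$status"
}
```

For example, from a root shell, with_congestion_control cubic iperf3 -c 203.0.113.10 runs one iperf3 test with cubic, where 203.0.113.10 is a placeholder for your own iperf3 server. In its default mode the iperf3 client sends the data, so the local algorithm governs the transfer.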

Conclusion

BBR replaces cubic’s loss-based congestion control with bandwidth and latency measurements, delivering better throughput on high-latency networks. After verifying kernel compatibility, loading the tcp_bbr module, setting fq as the queueing discipline, and applying the sysctl configuration, your system now uses BBR for new TCP connections. This optimization improves performance on long-distance or packet-loss-prone networks where cubic struggles to maintain efficient data transmission.

Does this work on Ubuntu 25.04?

Yes. Ubuntu 25.04 ships a kernel far newer than the 4.9 minimum, and the steps in this guide apply to current LTS and interim releases of both Ubuntu Desktop and Ubuntu Server.